Cross Entropy : An intuitive explanation with Entropy and KL-Divergence

on 01 Jun 2020 by Ramana Reddy Sane

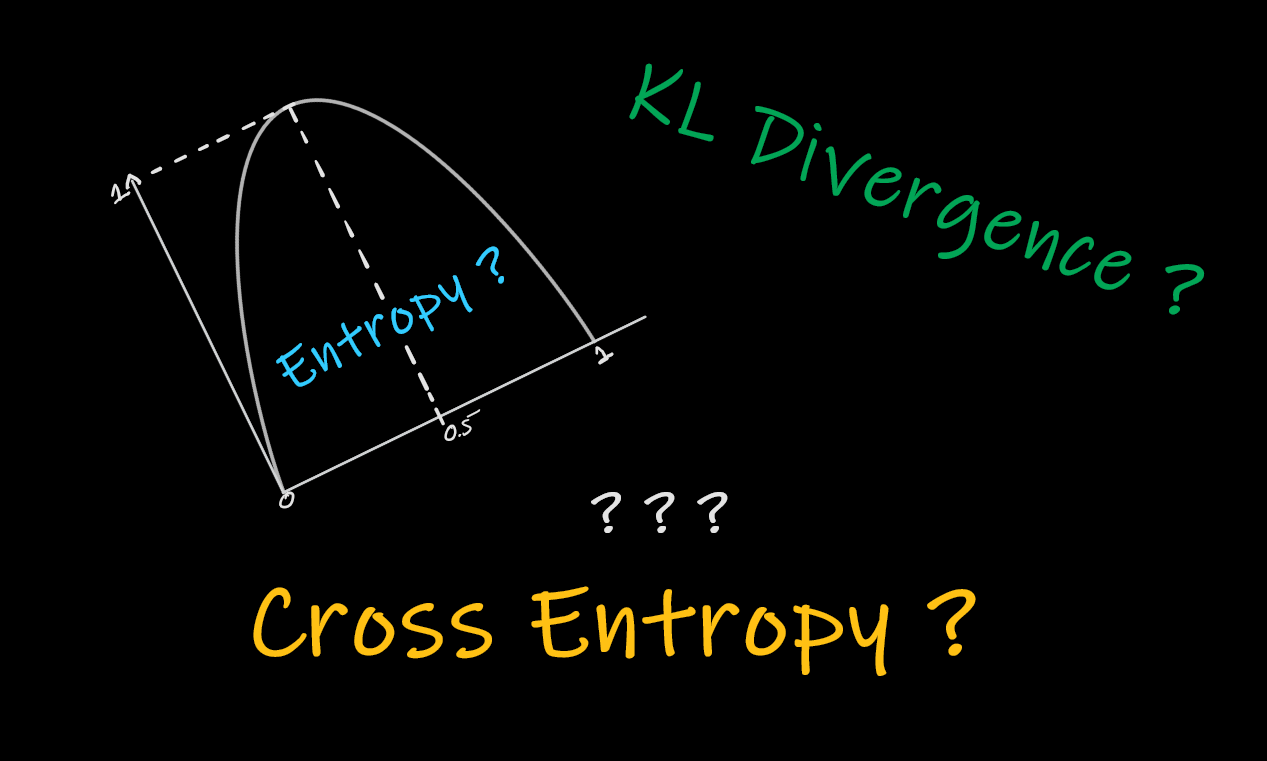

Cross-entropy shows up over and over in machine learning and deep learning. This article explains it from an information-theory perspective and tries to connect the dots between entropy, KL-divergence, and cross-entropy. KL-divergence is also important in its own right: it is used in decision trees and in generative models such as Variational Auto Encoders.
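To make the connection between the three quantities concrete, here is a minimal sketch in plain Python. The function names and the two example distributions `p` and `q` are illustrative assumptions, not taken from the article. It relies on the standard identity that cross-entropy equals entropy plus KL-divergence, H(p, q) = H(p) + KL(p || q), and assumes `q` is nonzero wherever `p` is nonzero.

```python
import math

def entropy(p):
    # H(p) = -sum_i p_i * log2(p_i), measured in bits
    return -sum(pi * math.log2(pi) for pi in p if pi > 0)

def cross_entropy(p, q):
    # H(p, q) = -sum_i p_i * log2(q_i); assumes q_i > 0 wherever p_i > 0
    return -sum(pi * math.log2(qi) for pi, qi in zip(p, q) if pi > 0)

def kl_divergence(p, q):
    # KL(p || q) = sum_i p_i * log2(p_i / q_i); same assumption on q
    return sum(pi * math.log2(pi / qi) for pi, qi in zip(p, q) if pi > 0)

# Hypothetical example: p is the "true" distribution, q is a model's guess.
p = [0.5, 0.25, 0.25]
q = [0.25, 0.25, 0.5]

h_p = entropy(p)            # 1.5 bits
h_pq = cross_entropy(p, q)  # 1.75 bits
kl = kl_divergence(p, q)    # 0.25 bits

print(h_p, h_pq, kl)
print(math.isclose(h_pq, h_p + kl))  # True
```

Since H(p) does not depend on the model `q`, minimizing cross-entropy with respect to `q` is the same as minimizing KL(p || q), which is one way to read why cross-entropy is such a common loss function.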
